Yishu Qiu, Manuele Aufiero, Kan Wang (Tsinghua University), Massimiliano Fratoni

There is increasing interest in coupling Monte Carlo (MC) transport calculations to depletion/burnup codes, since MC codes can provide high-fidelity flux distributions and cross sections. One of the main concerns with an MC transport-depletion approach is how Monte Carlo statistical uncertainties and nuclear data uncertainties propagate between the MC transport code and the burnup code. This project aims to develop sensitivity and uncertainty analysis capabilities in RMC-Depth, an internally coupled Monte Carlo transport-depletion code developed at Tsinghua University, China. Specifically, the goals of this project are:

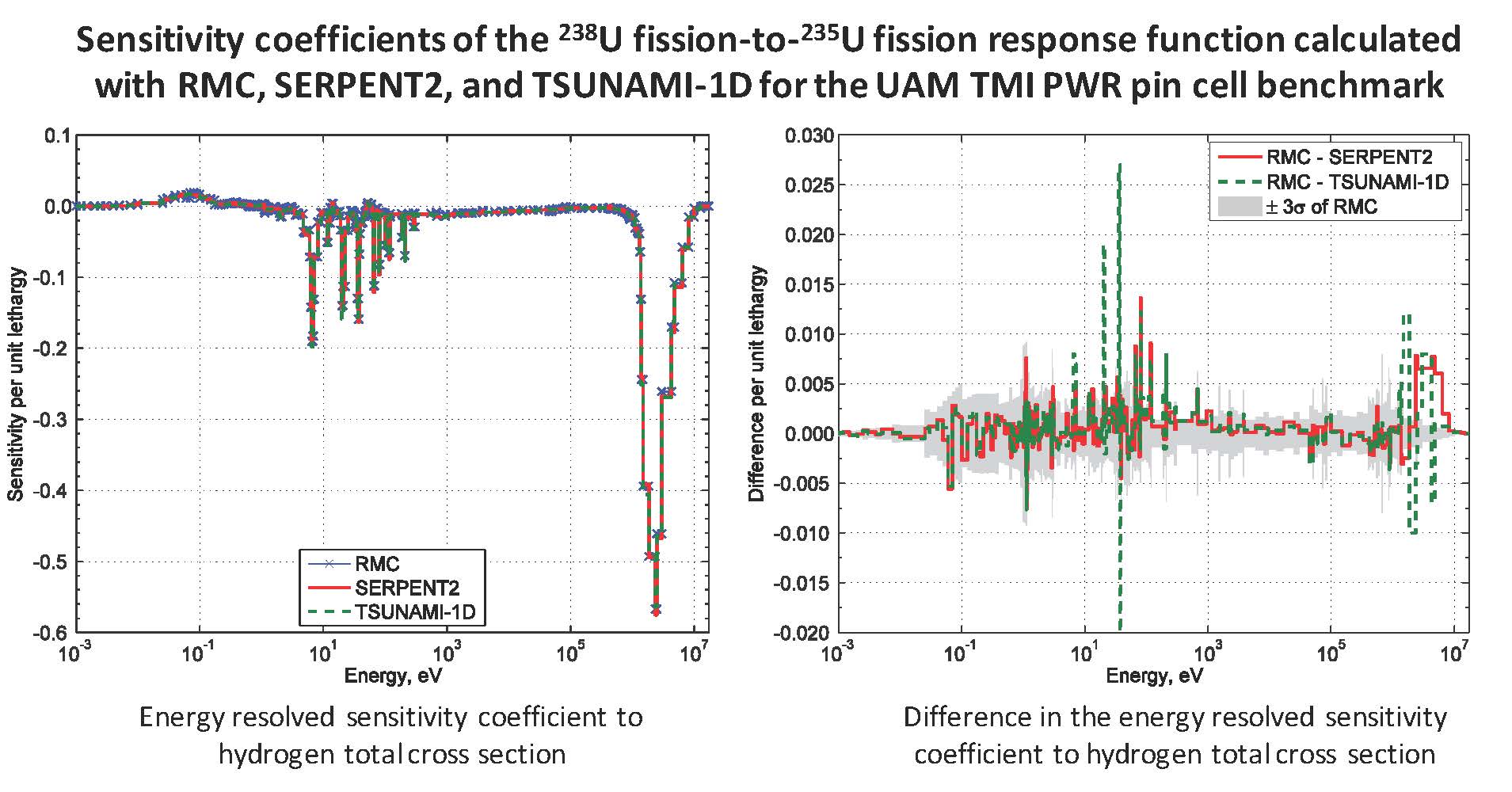

1. Study methods suitable for computing k-eigenvalue sensitivity coefficients with respect to continuous-energy cross sections and implement them in RMC; conduct sensitivity and uncertainty analysis of the effective multiplication factor with respect to nuclear data in transport calculations.

2. Study methods appropriate for computing general response sensitivity coefficients with respect to continuous-energy cross sections and implement them in RMC; conduct sensitivity and uncertainty analysis, with respect to nuclear data in transport calculations, of general responses, including linear response functions (e.g., relative powers, isotope conversion ratios, and multi-group cross sections) and bilinear response functions (e.g., adjoint-weighted kinetic parameters).

3. Study methods suitable for uncertainty analysis and propagation in Monte Carlo transport-burnup calculations. With the proposed methods, propagate the uncertainties in Monte Carlo transport-burnup calculations that arise from nuclear data, Monte Carlo statistics, isotope number densities, and the cross-correlations between nuclear data and number densities, analyzing each of these contributions separately at every burnup step.

4. Study methods suitable for uncertainty quantification with respect to other parameters, such as temperature and system dimensions.
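
Goals 1 and 2 combine sensitivity coefficients with nuclear data covariances to obtain response uncertainties. The description does not say which propagation formula RMC uses; a common first-order choice is the "sandwich rule", $(\Delta R/R)^2 = \mathbf{S}^{T}\mathbf{C}\,\mathbf{S}$, where $S_i = (\partial R/R)/(\partial\sigma_i/\sigma_i)$ is the relative sensitivity of a response $R$ (e.g., $k_{\text{eff}}$) to parameter $\sigma_i$ and $\mathbf{C}$ is the relative covariance matrix of those parameters. The Python sketch below illustrates that assumption only; the function name `sandwich_rule` and the two-parameter numbers are hypothetical and are not RMC output.

```python
import math
from typing import Sequence


def sandwich_rule(sensitivities: Sequence[float],
                  covariance: Sequence[Sequence[float]]) -> float:
    """First-order relative uncertainty of a response: sqrt(S^T C S).

    sensitivities: relative sensitivity coefficients S_i = (dR/R) / (dsigma_i/sigma_i)
    covariance:    relative covariance matrix C_ij of the parameters sigma_i
    (hypothetical helper for illustration, not an RMC routine)
    """
    n = len(sensitivities)
    if len(covariance) != n or any(len(row) != n for row in covariance):
        raise ValueError("covariance must be an n x n matrix matching sensitivities")
    # Accumulate the quadratic form S^T C S term by term.
    variance = sum(
        sensitivities[i] * covariance[i][j] * sensitivities[j]
        for i in range(n)
        for j in range(n)
    )
    if variance < 0.0:
        raise ValueError("covariance matrix is not positive semi-definite")
    return math.sqrt(variance)


# Hypothetical example: two parameters with 2 % and 3 % relative standard
# deviations and a small positive covariance between them.
S = [0.3, -0.1]
C = [[0.0004, 0.0001],
     [0.0001, 0.0009]]
print(f"relative uncertainty: {sandwich_rule(S, C):.4%}")
```

Because both the sensitivities and the covariances are relative quantities, the result is a relative standard deviation. The off-diagonal covariance terms are what allow correlated data uncertainties to reinforce or partly cancel; here the opposite-sign sensitivities make the cross term reduce the total.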

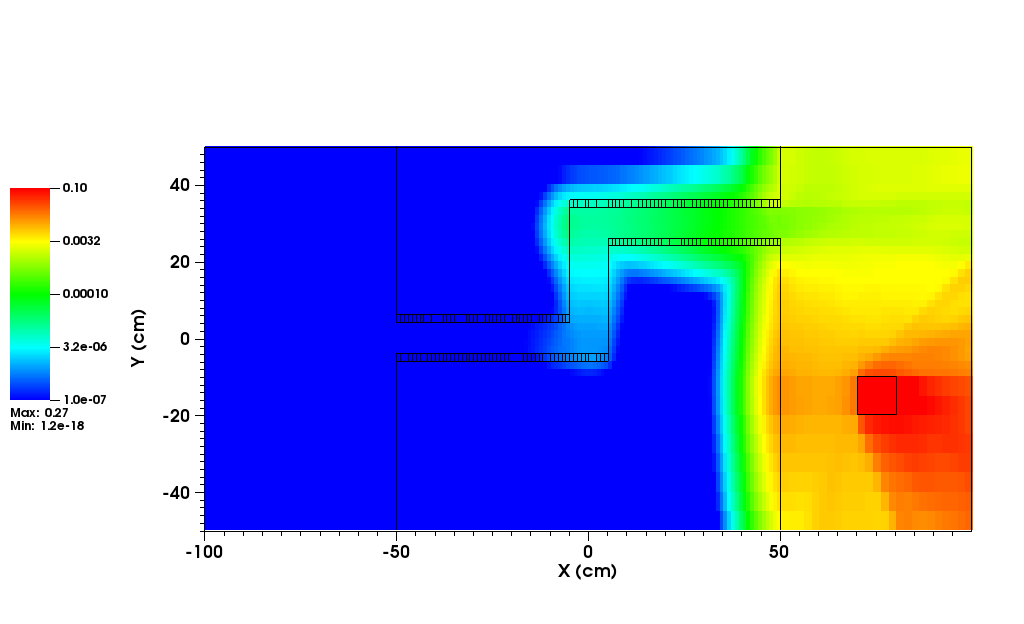

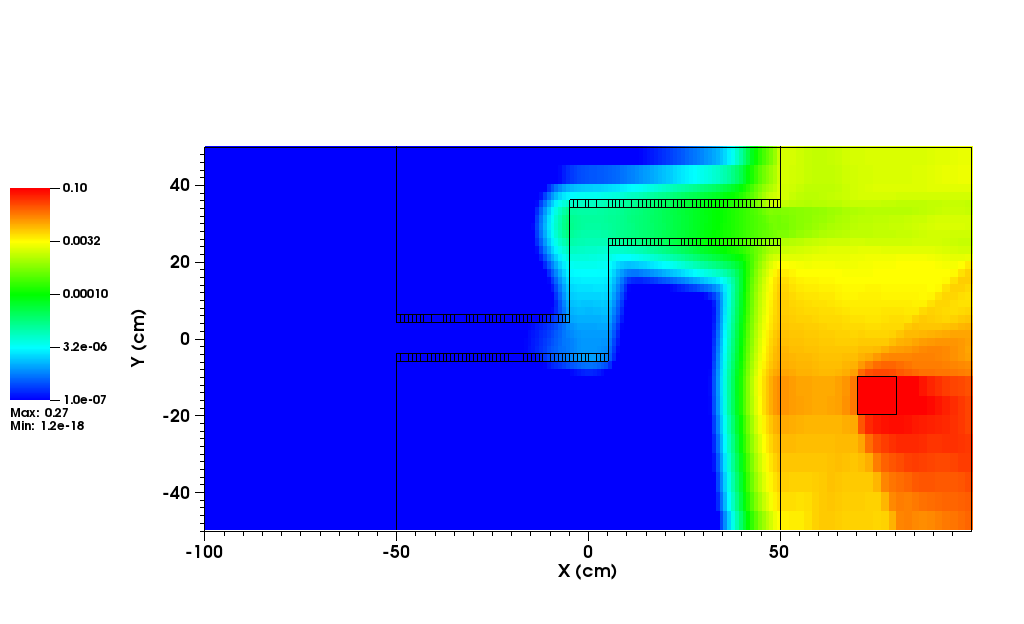

Hybrid methods for radiation transport aim to exploit the speed of deterministic transport, and the relatively uniform distribution of uncertainty in its solution, to accelerate and improve the performance of Monte Carlo transport. Used effectively, this kind of hybridization can reduce the uncertainty in the solution, shorten the time to a solution, or both. However, not all hybrid methods work for all types of radiation transport problems. When a method is not well suited to the problem physics, it may perform worse than analog Monte Carlo, wasting computer time and energy or even failing to produce an acceptable solution.

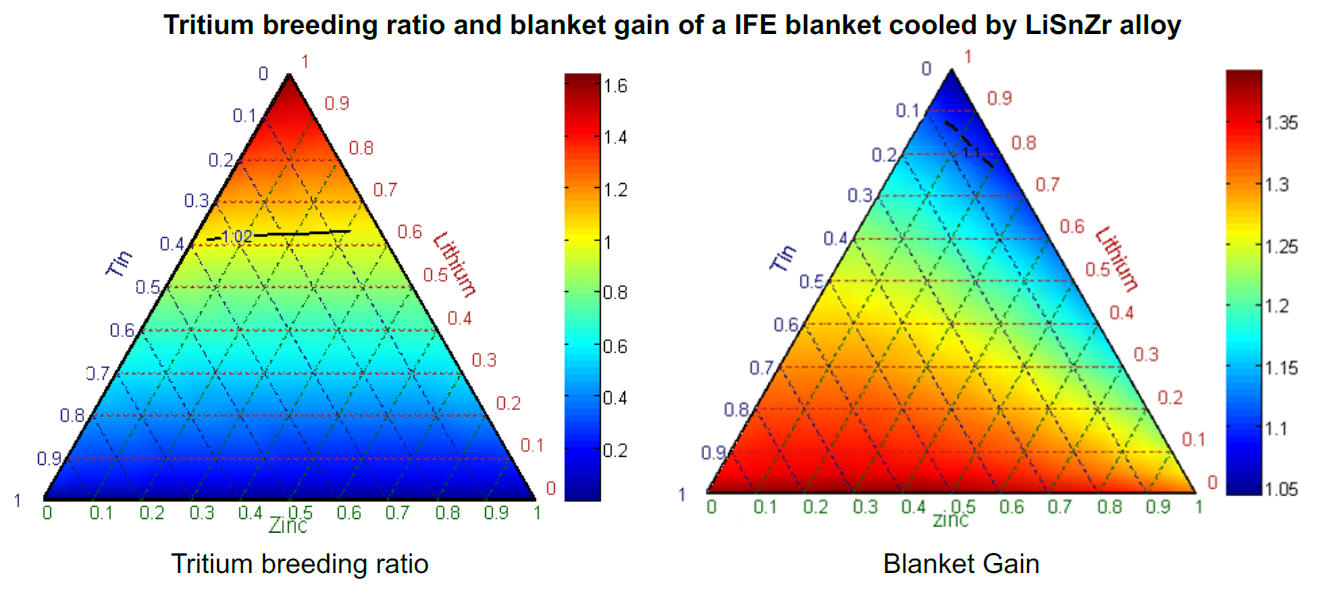

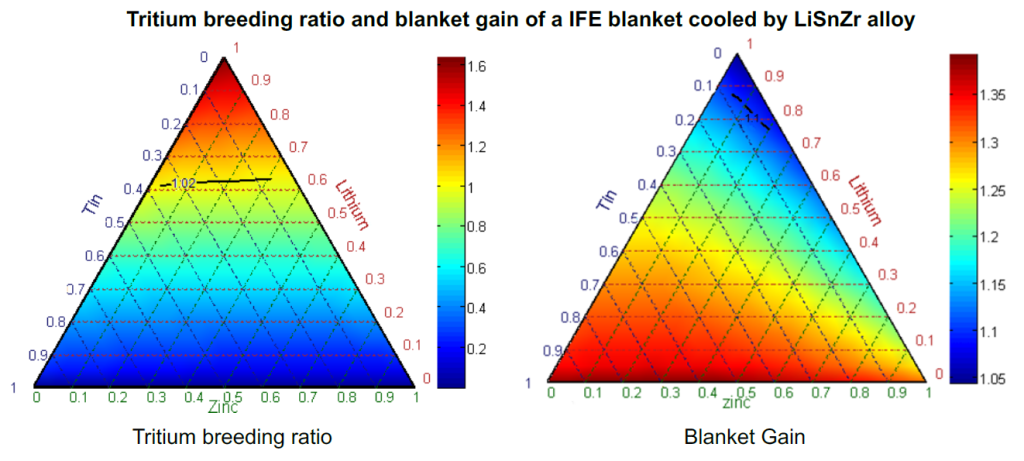

The goal of this work is to improve the safety and performance of fusion energy. Lithium is often the preferred breeder and coolant in fusion blankets because it offers excellent heat transfer and corrosion properties and, most importantly, a very high tritium solubility, which results in very low levels of tritium permeation throughout the facility infrastructure. However, lithium metal reacts vigorously with air and water, which exacerbates plant safety concerns. Consequently, Lawrence Livermore National Laboratory (LLNL) is attempting to develop a lithium-based alloy, most likely a ternary alloy, that maintains the beneficial properties of lithium (e.g., high tritium breeding and solubility) while reducing overall flammability concerns, for use in the blanket of the Inertial Fusion Energy (IFE) power plant.
